We survey 125+ representative studies and identify six main paradigms of AI evaluation, defined by specific objectives, methodologies, and assumptions. To operationalise this framework, we annotate these studies along key dimensions, delineating the unique questions and approaches each paradigm tackles. This gives researchers appreciation of the extent of AI evaluation and allows them to build bridges between insular research trajectories and identify gaps.

| Dimension | Benchmarking | Evals | Construct-Oriented | Exploratory | Real-World Impact | TEVV |

|---|---|---|---|---|---|---|

| Archetype | Deng et al. (2009) | Ganguli et al. (2022) | Guinet et al. (2024) | Berglund et al. (2024) | Collins et al. (2024) | Yang et al. (2023) |

| Indicators | Performance | Safety/Fairness | - | Performance/Behaviour | Cost/Fairness/Performance | Safety/robustness and reliability |

| Dist. summ. | Aggregate | Extreme/Aggregate | Functional | Aggregate/Manual Inspection | Aggregate | Extreme |

| Subject | - | System | System | System | System | System |

| Measurement | Observed | Observed | Latent | Observed | Observed | Observed |

| Task origin | - | Design | Design | Design | - | Design/Operation |

| Protocol | Fixed | Interactive | - | - | - | - |

| Reference | Objective | Subjective | - | - | Subjective/Rubric | Objective |

| Task Mode | - | Generation | - | - | Generation | - |

| Evaluators | Researchers | Researchers, Deployers | Researchers | Researchers | - | - |

| Motivation | Comparison | Assurance/Understanding | Understanding | Understanding | Comparison | Assurance |

| Disciplines | - | Security, Bio | Cognitive Science | - | Social Sciences | Control Theory |

| Raw Number | 72 | 13 | 15 | 18 | 4 | 10 |

| Percentage | 57% | 10% | 12% | 14% | 3.2% | 7.9% |

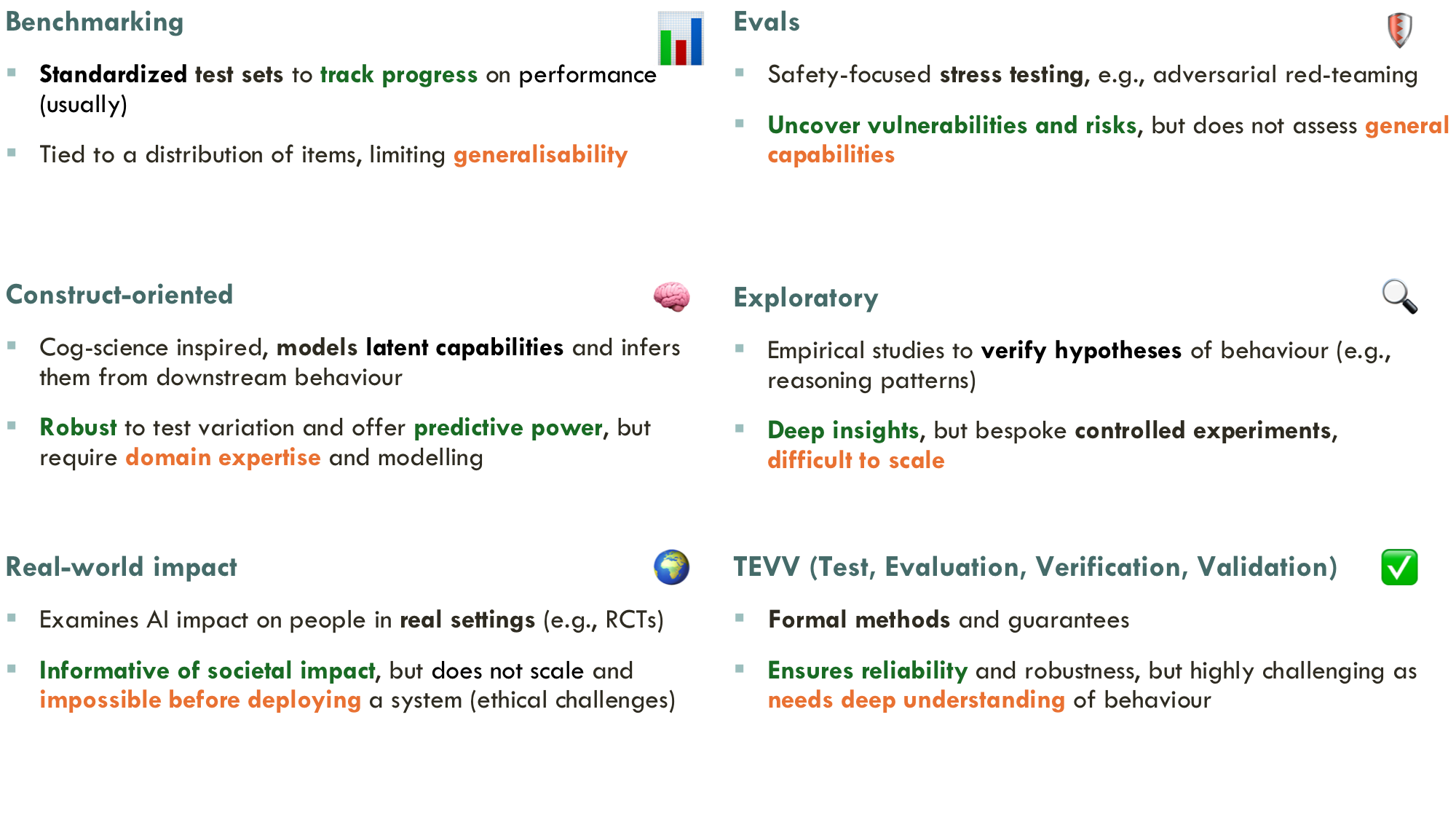

Research in AI evaluation has grown increasingly complex and multidisciplinary, attracting researchers with diverse backgrounds and objectives. As a result, divergent evaluation paradigms have emerged, often developing in isolation, adopting conflicting terminologies, and overlooking each other's contributions. This fragmentation has led to insular research trajectories and communication barriers both among different paradigms and with the general public, contributing to unmet expectations for deployed AI systems. To help bridge this insularity, in this paper we survey recent work in the AI evaluation landscape and identify six main paradigms. We characterise major recent contributions within each paradigm across key dimensions related to their goals, methodologies and research cultures. By clarifying the unique combination of questions and approaches associated with each paradigm, we aim to increase awareness of the breadth of current evaluation approaches and foster cross-pollination between different paradigms. We also identify potential gaps in the field to inspire future research directions.

The graph shows a UMAP projection of the Jaccard distance matrix, generated using the dimensions from our framework. Each point represents a surveyed paper, whose colour indicates the paradigms it belongs to. We find clusters of papers corresponding to different paradigms.

@misc{burden2025paradigmsaievaluationmapping,

title={Paradigms of {AI} Evaluation: Mapping Goals, Methodologies and Culture},

author={John Burden and Marko Tešić and Lorenzo Pacchiardi and José Hernández-Orallo},

year={2025},

eprint={2502.15620},

archivePrefix={arXiv},

primaryClass={cs.AI},

url={https://arxiv.org/abs/2502.15620},

}